We Tested 1,222 Emails Through ZeroBounce and Allegrow. Here's What Happened.

Catch-all domains are the blind spot of email verification, as we’ve shown in past examples and presentations, including comparisons with verifiers like ZeroBounce.

As you will have seen, most providers treat them as a black box, returning "accept_all" or "catch-all" and calling it a day. But when 30-50% of your B2B email list sits on catch-all domains, that's not verification. That's guesswork.

Now that providers are developing new methods and features to verify catch-all emails, we’ve created a full test process you can run yourself, and we’re sharing the results of over 1,000 emails verified by two market-leading providers. Allegrow’s results are compared against all of the relevant ZeroBounce options offered so far in 2026.

In today’s guide, you’ll see the stats and expected accuracy of each option and an outline of how to create your own test. As a bonus, you can request the raw results of the test we ran.

The Test Design

We created a dataset of 1,222 email addresses across catch-all domains including ABM, Avon, Bank of England, BD, BDO, Bessemer, BP, Copperpoint, Eaton, Fidelity, FICO, GoPro, Grey, Harness, HCLTech, Hiscox, Honeywell, Intact, Kiewit, Nvidia, Optimizely, and Sage. The list contained two types of contacts:

989 fictional character emails (80.9%): fake email addresses using obvious fictional character names. Think hermione.granger@, leia.organa@, derek.shepherd@, and similar patterns across these domains. These people don't work at these companies, so any verification tool marking them as "valid" is making an error.

233 real person permutations (19.1%): email format variations for 23 verified business professionals at these same catch-all domains. For each real contact, we generated approximately 10 common email permutations: firstname.lastname@, flastname@, firstname_lastname@, f.lastname@, and so on. For the purposes of this example, these people were sourced as employees of those companies from public data. However, when you run this test yourself, it’s vital to seed records you’ve communicated with and received replies from as the known-valid emails.
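To make the permutation step concrete, here is a minimal Python sketch for one person. The first four patterns are the ones named above; the other six are our illustrative assumption of other common formats, not a record of the exact list used in this test. The name and domain are placeholders.

```python
def email_permutations(first: str, last: str, domain: str) -> list[str]:
    """Return up to 10 common B2B email-format permutations for one person.

    The pattern list is illustrative, not the exact set used in the test.
    """
    f, l = first.lower(), last.lower()
    local_parts = [
        f"{f}.{l}",     # firstname.lastname
        f"{f[0]}{l}",   # flastname
        f"{f}_{l}",     # firstname_lastname
        f"{f[0]}.{l}",  # f.lastname
        f"{f}{l}",      # firstnamelastname (assumed pattern)
        f"{f}",         # firstname (assumed pattern)
        f"{l}.{f}",     # lastname.firstname (assumed pattern)
        f"{f}{l[0]}",   # firstnamel (assumed pattern)
        f"{l}{f[0]}",   # lastnamef (assumed pattern)
        f"{f}-{l}",     # firstname-lastname (assumed pattern)
    ]
    # dict.fromkeys drops accidental duplicates while keeping order
    return [f"{lp}@{domain}" for lp in dict.fromkeys(local_parts)]


print(email_permutations("Jane", "Doe", "example.com")[:4])
# ['jane.doe@example.com', 'jdoe@example.com', 'jane_doe@example.com', 'j.doe@example.com']
```

Running this for each of the 23 people and concatenating the output gives the "real person permutations" half of the list.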

Why Test Multiple Permutations?

B2B data providers don't always know the exact email format a company uses. They generate multiple permutations and rely on verification to identify which one is correct. A good verification tool should:

1. Identify which permutation(s) actually reach the real person

2. Reject permutations that don't resolve to a valid mailbox

3. Reject all fictional character emails entirely

The ideal result? Find all 23 real people (at least one valid permutation each) and mark all 989 fictional emails as risky or invalid.

Why This Methodology Works

Most verification comparisons use lists where nobody knows the true answer. Did that email bounce because it's invalid, or because of a temporary server issue? Was that "risky" rating correct, or overly cautious? Without ground truth, you're just comparing high-level "valid" percentages between providers, not actual accuracy.

To properly test verification quality, you need contacts where you already know the expected outcome from a reliable source. The gold standard is email replies: if someone responded to your outreach last week, you know that address works. Seeding your test list with these known-valid contacts—alongside known-invalid addresses—lets you measure what actually matters: does the tool correctly identify the emails you can trust?

Our approach eliminates ambiguity. We know exactly which contacts are real and which are fictional. There's no interpretation required, just counting.

One limitation worth noting: this test is based entirely on publicly available data and is intended as an educational example. We did not seed contacts based on email reply data, purely because we can't make data on people who have replied to our emails public. (In the test you create, however, neither provider will have the replies you hold internally, so it’s fair game to include them in the sample.) Instead, our test mirrors a B2B data provider’s use case: generating multiple format variations for a known contact and relying on verification to identify which permutation is correct.

If you want to test verification for your own outreach lists, seeding with recent reply data will give you the most accurate benchmark.

ZeroBounce Standard Results (1 Credit Per Contact + Fastest Option)

ZeroBounce processed the list quickly and returned results. The problem wasn't speed—it was accuracy and coverage.

‘False Positive Rate Estimate’ (based on this example) is the number/percentage of fictional emails we generated using AI at these domains that were marked with the ZB status valid.

‘Real Contacts Found’ (based on this example) is the number of person records where ZeroBounce was able to identify at least 1 permutation with the ZB status valid.

*The exact price could vary; this is based on the starting price of $0.01 per credit advertised for the pay-as-you-go 2K credit bundle.
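Both metrics can be scored mechanically once every row carries its ground-truth label. The sketch below is a hypothetical helper (the row layout and field order are our assumptions). Note that ‘Real Contacts Found’ is counted per person record, so a person counts as found if any one of their permutations is marked valid.

```python
from collections import defaultdict


def score_run(rows):
    """Score one provider run against ground truth.

    rows: iterable of (email, kind, person_id, status) tuples, where kind is
    "fictional" or "real", person_id groups a real person's permutations
    (None for fictional rows), and status is the provider's verdict.
    """
    fictional_total = 0
    fictional_valid = 0
    found = defaultdict(bool)  # person_id -> any permutation marked valid?
    for _email, kind, person_id, status in rows:
        is_valid = status == "valid"
        if kind == "fictional":
            fictional_total += 1
            if is_valid:
                fictional_valid += 1
        else:
            found[person_id] = found[person_id] or is_valid
    return {
        "false_positive_rate": fictional_valid / fictional_total if fictional_total else 0.0,
        "real_contacts_found": sum(found.values()),
        "real_person_records": len(found),
    }


rows = [
    ("hermione.granger@example.com", "fictional", None, "valid"),
    ("leia.organa@example.com", "fictional", None, "invalid"),
    ("jane.doe@example.com", "real", "p1", "valid"),
    ("jdoe@example.com", "real", "p1", "invalid"),
]
print(score_run(rows))
# {'false_positive_rate': 0.5, 'real_contacts_found': 1, 'real_person_records': 1}
```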

The Catch-All Problem

For example, both abm.com and bd.com are catch-all domains. ZeroBounce returned "valid" with the sub-status "accept_all" for these addresses. That status effectively means: we can't tell if this address is real, but the domain accepts everything, so we'll call it valid.

The result? ZeroBounce couldn't distinguish between:

• p********m********@abm.com: a real business professional

• hermione.granger@abm.com: a Harry Potter character

• leia.organa@abm.com: a Star Wars character

All three received the ZB Status "valid" with the ZB Sub-status "accept_all". In total, that's 80 emails we expect are fictional characters marked with either a valid or an inconclusive status, depending on how you interpret it. These emails could bounce and/or be silently forwarded to a group account on the company domain (due to the catch-all configuration), e.g. info@company.com.

Meanwhile, ZeroBounce didn’t identify 7 out of 23 person records where we expect at least one of the 10 permutations per contact is a real email. That's 30% of expected valid business professionals lost from your dataset, with no email found.

As a reminder, for the real, publicly listed person profiles there were 23 person records with 233 potential email variations in total.

Allegrow Results (High accuracy)

Allegrow identified all 23 real contacts and rejected 988 of 989 fictional addresses. That is a very high rate of sourcing valid permutations on the enterprise domains included in the sample, with a very low expected error rate. Processing took longer, as verifying enterprise emails takes more time. It’s worth noting that CSV uploads have a different priority speed in the Allegrow product than API usage (an API customer could complete these requests in as little as 62 seconds).

Details on the Allegrow Error

The one fictional contact Allegrow marked as valid was derived from the name Rachel Green from Friends (TV show).

Here's the thing: "Rachel Green" is a common name. When we investigated, we found there actually is someone named Rachel Green working at the company domain this permutation was attempted on. (The example can be shared on request here.)

Therefore, this might not be a false positive. It may be the verification correctly identifying a real person with this common name at a very large company, even though we created it as a "fictional" test case.

Compare this to ZeroBounce, which marked hermione.granger@abm.com and leia.organa@abm.com with the ZB Status “valid”. There's no Hermione Granger at ABM. There's no Leia Organa.

Head-to-Head Comparison

The numbers tell a clear story. Allegrow found 7 more real contacts (30% of the 23 person records) while producing 80x fewer false positives (1 vs. 80). ZeroBounce, however, had the edge on speed in this example and was twice as fast.

Per-credit pricing for both vendors is likely to differ considerably at scale, and the same goes for speed: Allegrow mentions custom capacity for large-scale users, providing faster responses for the API version of the product.
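The headline figures can be re-derived from the counts reported above: 80 vs. 1 fictional emails marked valid out of 989, and 16 (23 minus the 7 missed) vs. 23 of 23 person records found. A short Python check:

```python
def pct(part: int, whole: int) -> float:
    """Share of `whole` as a percentage."""
    return 100 * part / whole


FICTIONAL_TOTAL = 989  # fictional character emails in the list
REAL_RECORDS = 23      # real person records (about 10 permutations each)

zb_fp, allegrow_fp = 80, 1          # fictional emails marked valid
zb_found, allegrow_found = 16, 23   # records with >= 1 valid permutation (23 - 7 = 16)

print(f"False positive rate: ZeroBounce {pct(zb_fp, FICTIONAL_TOTAL):.1f}%, "
      f"Allegrow {pct(allegrow_fp, FICTIONAL_TOTAL):.1f}%")   # 8.1% vs 0.1%
print(f"Real contacts found: ZeroBounce {pct(zb_found, REAL_RECORDS):.1f}%, "
      f"Allegrow {pct(allegrow_found, REAL_RECORDS):.1f}%")   # 69.6% vs 100.0%
print(f"Coverage gap: {pct(allegrow_found - zb_found, REAL_RECORDS):.1f} points")  # 30.4
print(f"False positive ratio: {zb_fp / allegrow_fp:.0f}x")    # 80x
```

The "30%" is therefore a gap in percentage points of the 23 records; in relative terms, going from 16 to 23 found contacts is roughly 44% more.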

What About ZeroBounce's Catch-All Scoring? (Up to 2 Credits Per Contact)

ZeroBounce offers a premium catch-all scoring feature, advertised as costing an additional credit per catch-all address it grades. You opt in to it when uploading a list; if you use the API, it’s a separate endpoint.


However, in our opinion this data is harder to benchmark and use, because rather than a status, it returns a score that correlates to a percentage chance of bouncing.

Therefore, in this example we chose to assume that anything with a Quality Score (QS) of 8 or above should be considered valid, although you might choose to interpret the score differently.
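Applied to results, the QS >= 8 rule might look like this. It's a sketch of our own interpretation, not a ZeroBounce recommendation. The status strings mirror those discussed in this article, and the function name is hypothetical.

```python
from typing import Optional

QS_VALID_THRESHOLD = 8  # our interpretation for this test, not a ZeroBounce rule


def counts_as_valid(status: str, quality_score: Optional[int] = None,
                    threshold: int = QS_VALID_THRESHOLD) -> bool:
    """Decide whether a result counts as 'valid' when scoring the test.

    - status "valid" (including sub-status accept_all) counts as valid,
      matching how the standard run was scored.
    - status "catch-all" counts as valid only if its 0-10 Quality Score
      meets the threshold.
    - everything else counts as not valid.
    """
    if status == "valid":
        return True
    if status == "catch-all" and quality_score is not None:
        return quality_score >= threshold
    return False
```

Lowering the threshold recovers more real contacts but admits more fictional ones, which is exactly the trade-off the Quality Score results below illustrate.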

We tested the same dataset with this feature enabled and have re-evaluated the results below.

With this feature activated, 1 additional false positive (QS: 8) was included in the returned data, and one additional real person record was resolved to valid (QS: 10).

In this example, the premium feature could cost 44% more than the standard version. For that increase, you get around a 4% improvement in real contacts found, while the false positive rate can actually increase depending on how you treat the quality scores.
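Because scoring is advertised as one extra credit per graded catch-all address, the relative cost increase comes down to the share of the list that gets graded. Here is a back-of-the-envelope sketch. It assumes a flat $0.01 per credit (the starting rate cited above) and no other fees, and `run_costs` is a hypothetical helper.

```python
PRICE_PER_CREDIT = 0.01  # advertised starting pay-as-you-go rate (assumed flat)


def run_costs(total_emails: int, graded_catch_alls: int,
              price: float = PRICE_PER_CREDIT):
    """Return (standard cost, cost with catch-all scoring, % increase).

    Assumes 1 credit per email for standard verification plus 1 extra
    credit for each catch-all address the scoring feature grades.
    """
    standard = total_emails * price
    with_scoring = (total_emails + graded_catch_alls) * price
    increase_pct = 100 * graded_catch_alls / total_emails
    return standard, with_scoring, increase_pct
```

Under these assumptions, a 44% increase on the 1,222-email list would correspond to roughly 538 graded addresses (0.44 × 1,222 ≈ 538). Your own figure will depend on how many addresses are labeled catch-all.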

The Quality Score Problem

ZeroBounce's catch-all scoring returns a Quality Score from 0-10, where 10 means a "1.3% chance of bouncing." Here's how it performed on the test data:

A fictional TV character scored 8/10, while the emails of six expected real business professionals scored 0/10.

The premium scoring recovered only 1 of the 7 contacts missed by the standard version—while incurring additional fees and potentially increasing the error rate.

It’s important to note that the fictional character mentioned, ‘Meredith Grey’, could possibly be a real person record, similar to what occurred with ‘Rachel Green’ on Allegrow. However, for a balanced approach, we included both in each provider’s calculated error rate. (We could not find any public record of a Meredith Grey at the company in question.)

What About ZeroBounce's New Verify+ Feature? (Explicit opt-in required, but no additional fee)

ZeroBounce offers an additional feature called Verify+ that runs as a "phase two" verification process after the initial standard verification. Verify+ spends up to 48 hours sending actual test emails to contacts previously labeled as "catch-all" and uses bounce-back responses to attempt further validation.

How Verify+ Works

This feature requires explicit opt-in consent. When enabling Verify+, ZeroBounce displays a disclaimer acknowledging that test emails will be sent to your contacts. There's no additional cost, but you're authorizing them to conduct email-based verification on your behalf.

We tested Verify+ using the same test dataset from our earlier comparison. The results below reflect the Phase 2 output combined with the results from the ZeroBounce standard verification. If you use the feature, the standard results become available on their own before the Verify+ results.

Catch-all domains processed by Verify+: bdo.com, dukehealth.org, eaton.com, grey.com, harness.io, hcltech.com, intact.net, kiewit.com, optimizely.com, sage.com

Total emails processed in Phase 2: 381

Verify+ Results

The False Positive Problem

Verify+ marked a significant number of fictional character emails as valid, creating a false positive rate of 33.1%, all of which are expected errors (synthetically generated fake contacts). That's one in three fake emails incorrectly marked as valid.

Examples of fictional characters marked valid:

• The Matrix at hcltech.com: morpheus.matrix, trinity.matrix

• Star Wars at dukehealth.org: han.solo, anakin.skywalker, padme.amidala, obi.kenobi

• Game of Thrones at other domains: jon.snow, arya.stark, tyrion.lannister, daenerys.targaryen

There is unlikely to be a person called Morpheus Matrix working at HCLTech, or a Trinity Matrix. This type of test dataset can contain ambiguous cases, such as common names that coincidentally match real employees, but these are unmistakably fictional characters. They demonstrate a real issue with using email sending to catch-all domains as a method to reliably verify emails.

Why Does This Happen?

The fundamental flaw in email-send verification is the assumption that "no bounce = valid address." This isn't true, and Allegrow has previously demonstrated this in a video test.

Enterprise email systems (Office 365, Google Workspace, and Secure Email Gateways) are frequently configured by IT administrators to suppress Non-Delivery Reports (NDRs) for security reasons. Instead of bouncing, emails to non-existent addresses are silently rerouted to shared inboxes like info@ or quarantined.

This creates dangerous false signals. You send to what Verify+ told you was a valid address, but your emails are landing in IT-monitored catch-all inboxes or, worse, being flagged as spam. You're operating under the assumption that these contacts are valid and marketable when they're actually silent failures damaging your sender reputation.

Allegrow doesn't use email sending for catch-all verification precisely because of this limitation. Our signal-based verification detects contacts that look valid but would silently fail—helping you avoid false positives without the risks of test email delivery.
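To illustrate why "no bounce = valid" breaks down, here is a toy model in Python. `ToyMailServer` is entirely hypothetical and does not talk to real SMTP servers. It only shows that, once NDRs are suppressed, a send-based check returns the same verdict for a real mailbox and a fictional one.

```python
from dataclasses import dataclass, field


@dataclass
class ToyMailServer:
    """Toy model of an enterprise mail server (hypothetical, not a real API)."""
    mailboxes: set
    catch_all_to: str = "info"   # where unknown recipients are rerouted
    suppress_ndr: bool = True    # admin setting: never send bounces
    delivered: dict = field(default_factory=dict)

    def receive(self, local_part: str) -> bool:
        """Accept a message; return True only if an NDR (bounce) is sent back."""
        if local_part in self.mailboxes:
            target = local_part
        elif self.suppress_ndr:
            target = self.catch_all_to  # silently rerouted to a shared inbox
        else:
            return True  # unknown recipient and NDRs enabled: bounce
        self.delivered[target] = self.delivered.get(target, 0) + 1
        return False


def send_test_verdict(server: ToyMailServer, local_part: str) -> str:
    """The flawed inference behind send-based checks: no bounce means valid."""
    return "invalid" if server.receive(local_part) else "valid"


server = ToyMailServer(mailboxes={"jane.doe", "info"})
print(send_test_verdict(server, "jane.doe"))          # valid (correct)
print(send_test_verdict(server, "hermione.granger"))  # valid (false positive)
print(server.delivered)  # {'jane.doe': 1, 'info': 1}
```

Flipping `suppress_ndr` to `False` makes the fictional address come back "invalid". The verdict therefore reflects the admin's NDR setting rather than whether the mailbox exists.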

Why Verify+ Missed a Small Portion of Real Person Records

Verify+ improved real contact detection from 74% to 96%, finding 22 of 23 expected real person records. However, a gap in coverage remains compared with Allegrow, even with the higher expected error rate.

The reason: Verify+ only processes contacts previously labeled as "catch-all." One of our valid contacts' email permutations was labeled "unknown" in the initial verification pass. Since it wasn't flagged as catch-all, Verify+ never attempted the second-phase email verification. That contact remained "unknown" in the final results and is still missing from your usable list.
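The coverage gap follows directly from this eligibility rule. Here is a minimal sketch of a two-phase merge in which the second pass only runs on rows labeled "catch-all". `phase_two_check` is a hypothetical stand-in for the send-based check, not a ZeroBounce API.

```python
from typing import Callable, Dict


def merge_two_phase(phase_one: Dict[str, str],
                    phase_two_check: Callable[[str], str]) -> Dict[str, str]:
    """Combine a first pass with a catch-all-only second pass.

    phase_one maps email -> first-pass status. phase_two_check is only
    invoked for rows labeled "catch-all"; every other status (including
    "unknown") passes through unchanged.
    """
    final = {}
    for email, status in phase_one.items():
        if status == "catch-all":
            final[email] = phase_two_check(email)
        else:
            final[email] = status
    return final


phase_one = {"jane.doe@example.com": "catch-all", "jdoe@example.com": "unknown"}
print(merge_two_phase(phase_one, lambda email: "valid"))
# {'jane.doe@example.com': 'valid', 'jdoe@example.com': 'unknown'}
```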

As a final side note: at Allegrow, we also anticipate that valid contacts can receive bounce-backs to test sends in general, and NDR codes aren’t always transparent about the reason for a bounce. This is another reason we generally disagree with sending test emails for verification purposes.

Final Comparison: All Four Options Results

Using the same test dataset of 1,222 emails (989 fictional, 233 real person permutations across 23 person records), here's how all four verification approaches compare:

ZeroBounce Verify+ achieves near-complete real contact detection at 96%. But from this sample, that appears to come at the cost of one in three fictional characters being incorrectly marked as valid. The other ZeroBounce options (standard and AI scoring) provide lower false positive rates of slightly above 8%, at the cost of lower coverage and fewer valid contacts found.

Allegrow (only 1 version/option) achieves 100% real contact detection with a 0.1% false positive rate, filtering out almost every fake email while still finding every real contact. That's an accuracy increase of more than 30% on this sample, with full coverage of valid contacts within minutes (no extra features or 2-day wait needed). On pricing, Allegrow has a lower cost per contact but offers packages as recurring subscriptions rather than pay-as-you-go. This is due to Allegrow’s focus on the most serious, large-scale email verification users.

It’s important to note this data was exclusively B2B emails, whereas we expect Zerobounce’s offering includes many features for B2C emails.

Note on pricing estimates: Test conducted February 2026. Pricing reflects estimated rates at the time of testing. Each provider will likely offer different pricing for large volumes, so the starting rate published on each provider's site was used. Results of your own test will vary based on list composition and domain mix. This is an example of our test for you to learn to conduct your own.

Understanding the Results

ZeroBounce Status Meanings

ZeroBounce’s own documentation should be referenced for up-to-date guidance and full details. However, based on our current understanding, here’s a summary of the statuses we saw, for initial context:

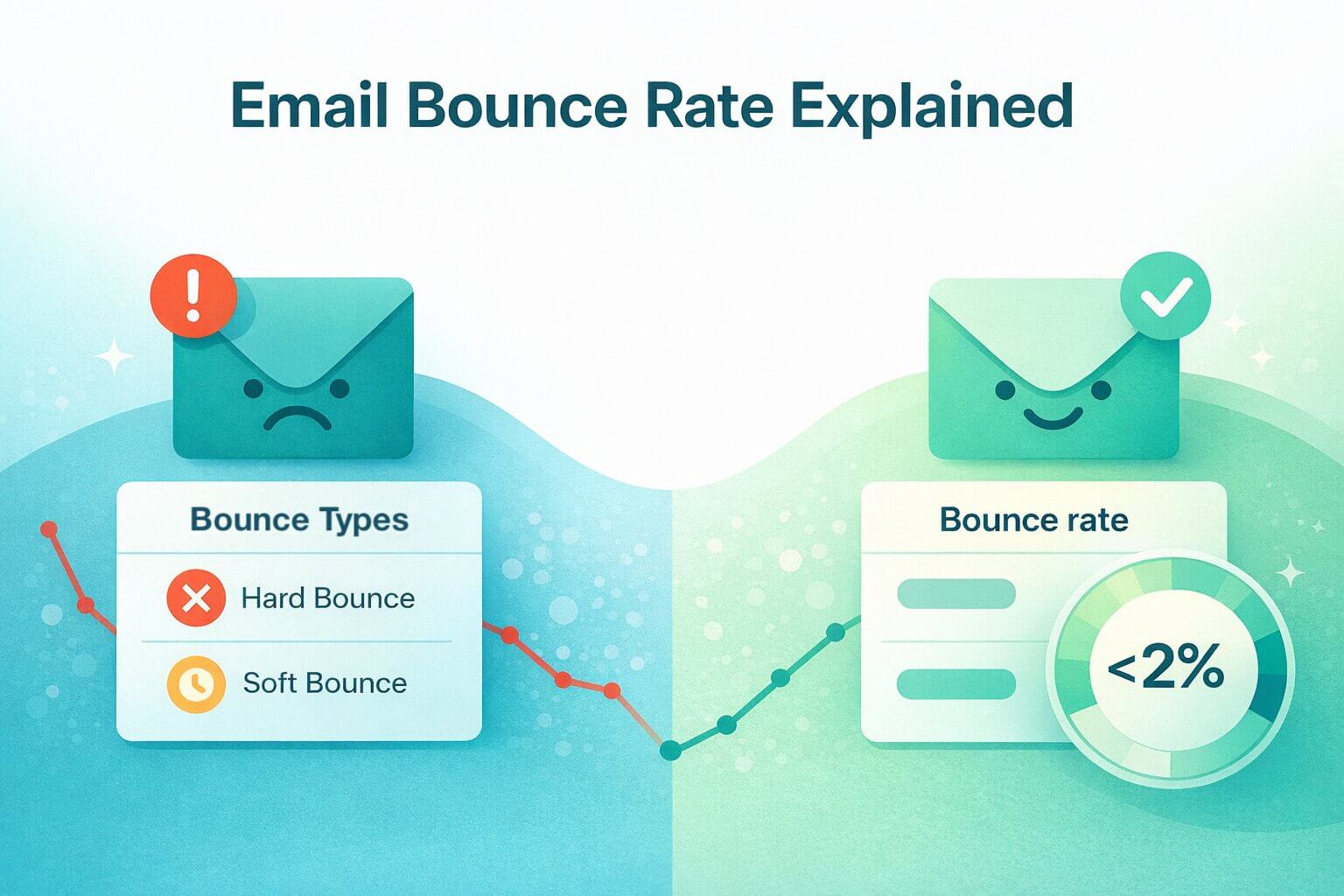

· valid – Deliverable addresses with bounce rates under 2%. Safe to email.

· invalid – Undeliverable. The mailbox doesn't exist, the domain has no mail records, or the address is otherwise unreachable. Remove these.

· catch-all – The server accepts everything without confirming whether the specific mailbox exists. Deliverability is a coin toss. Segment and monitor if you mail them.

· spamtrap – Suspected honeypot addresses used to identify spammers. Emailing these damages your sender reputation. Don't mail.

· abuse – Recipients with a history of marking emails as spam. High risk.

· do_not_mail – Technically reachable but risky for various reasons: disposable/temporary domains, role-based addresses (info@, sales@), known litigators, or addresses flagged on global suppression lists. Mailing these typically hurts deliverability or invites complaints.

· unknown – Validation couldn't complete (server offline, anti-spam blocking, timeout, etc.). Historically about 80% of these turn out to be invalid. Worth re-validating before deciding.

Allegrow Status Meanings

Allegrow’s full, up-to-date status list is maintained in its API documentation and help center, but this summary provides an overview for context:

· 'safe' = valid (safe to send to / provide to customers; both catch-alls and non-catch-alls included)

· 'block_bounce_risk' = invalid

· 'dead_email' = invalid (often won't bounce even though it's incorrect, typically due to a server catch-all set-up)

· 'do_not_mail_abuse' = valid (with higher spam report risk)

· 'some_risk' = inconclusive (re-run after 30 days)

· 'spamtrap' = valid (but high spam trap risk)

· 'more_time_required' = (*sync endpoint only* more checks are still being run). Either retry later or upgrade to the asynchronous API to avoid this.

· 'missing_email' = invalid (there's likely a syntax error on the email, making it invalid or not correctly structured as an email).
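When scoring your own test, it helps to collapse provider statuses into a few buckets first. Here is a sketch for the Allegrow statuses summarized above. The bucket names follow this summary, with "pending" as our own label for more_time_required, so confirm them against the current API documentation before relying on them.

```python
from collections import Counter

# Buckets copied from the status summary above; "pending" is our own label.
ALLEGROW_BUCKETS = {
    "safe": "valid",
    "block_bounce_risk": "invalid",
    "dead_email": "invalid",
    "do_not_mail_abuse": "valid",    # valid, but higher spam-report risk
    "some_risk": "inconclusive",     # re-run after 30 days
    "spamtrap": "valid",             # valid, but high spam-trap risk
    "more_time_required": "pending",
    "missing_email": "invalid",
}


def bucket(status: str) -> str:
    """Map an Allegrow status to a bucket; fail loudly on anything unmapped."""
    try:
        return ALLEGROW_BUCKETS[status]
    except KeyError:
        raise ValueError(f"unmapped Allegrow status: {status!r}") from None


def summarize(statuses) -> Counter:
    """Count how many results land in each bucket."""
    return Counter(bucket(s) for s in statuses)


print(summarize(["safe", "dead_email", "safe", "some_risk"]))
```

Raising on an unmapped status, rather than silently defaulting, keeps a newly introduced status from quietly skewing your metrics.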

Build Your Own Test

We've shown our methodology. Here's how to create your own verification comparison:

Step 1: Select catch-all domains

Choose 5-10 companies you know use catch-all email configurations. These are typically larger enterprises.

Step 2: Plant real contacts

Include email addresses of people you've actually engaged with—colleagues, clients, or contacts who've replied to your emails. You need ground truth.

Step 3: Create fictional contacts / known expected invalids

Use character names that couldn't possibly be real employees: fictional characters, historical figures at modern companies, or absurd combinations. Or make these contacts even harder to spot by generating fully random names or making small changes to valid contacts, such as adding an extra or different letter.
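Both ideas in Step 3 can be generated programmatically. Here is a minimal sketch, assuming placeholder syllables and a placeholder domain (all names here are hypothetical). A fixed random seed keeps the list reproducible. One caution: a one-letter change can occasionally produce another real person's address, so spot-check near-misses before treating them as known invalids.

```python
import random
import string


def near_miss(local_part: str, rng: random.Random) -> str:
    """Replace exactly one letter of a known-valid local part with a different letter."""
    positions = [i for i, ch in enumerate(local_part) if ch.isalpha()]
    i = rng.choice(positions)
    options = [c for c in string.ascii_lowercase if c != local_part[i].lower()]
    return local_part[:i] + rng.choice(options) + local_part[i + 1:]


def random_name(rng: random.Random,
                syllables=("ka", "lo", "mi", "ren", "tor", "vex", "zu")) -> str:
    """Build a random first.last local part from made-up syllables."""
    def word() -> str:
        return "".join(rng.choice(syllables) for _ in range(rng.randint(2, 3)))
    return f"{word()}.{word()}"


rng = random.Random(42)   # fixed seed so the test list is reproducible
domain = "example.com"    # placeholder domain
invalids = [f"{near_miss('jane.doe', rng)}@{domain}", f"{random_name(rng)}@{domain}"]
print(invalids)
```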

Step 4: Run both providers

Upload the same list to each service and compare results.

Step 5: Calculate your metrics

• False Positive Rate = Fictional emails marked valid / Total fictional emails

• Real Contact Rate = Real emails marked valid / Total real emails
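If you export each provider's results to a CSV with your ground-truth label alongside, the two Step 5 formulas take a few lines of Python. The column names (email, expected, status) are our assumption. Pass each provider's own "valid" status (e.g. "safe" for Allegrow) via `valid_statuses`.

```python
import csv
import io


def step5_metrics(csv_text: str, valid_statuses=frozenset({"valid"})) -> dict:
    """Compute False Positive Rate and Real Contact Rate from CSV text.

    Expects columns: email, expected ("real" or "fictional"), status.
    """
    marked_valid = {"real": 0, "fictional": 0}
    totals = {"real": 0, "fictional": 0}
    for row in csv.DictReader(io.StringIO(csv_text)):
        label = row["expected"]
        totals[label] += 1
        if row["status"] in valid_statuses:
            marked_valid[label] += 1

    def rate(label: str) -> float:
        return marked_valid[label] / totals[label] if totals[label] else 0.0

    return {
        "false_positive_rate": rate("fictional"),
        "real_contact_rate": rate("real"),
    }


sample = """email,expected,status
hermione.granger@example.com,fictional,valid
leia.organa@example.com,fictional,invalid
jane.doe@example.com,real,valid
jdoe@example.com,real,invalid
"""
print(step5_metrics(sample))
# {'false_positive_rate': 0.5, 'real_contact_rate': 0.5}
```

For a file on disk, open it with `newline=""` and pass the file object to `csv.DictReader` instead of wrapping a string.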

Get the Full Test Data

Want to review our complete test methodology and results? We can share the full dataset for reference with companies serious about verification accuracy.

What you'll receive:

• Complete side-by-side comparison data

• Detailed breakdown by domain and status

• Guide to creating your own catch-all verification test

• 1,000 free Allegrow credits to run your own comparison

To request access: Fill in this form to schedule a call with our team. We'll walk through the methodology, answer questions about your specific use case, and share the full dataset via email.

We require a brief conversation before sharing the data to ensure proper handling of the contact information included in the test set.

For Data Providers: Run Your Own Comparison

If you're a B2B data provider looking to benchmark verification accuracy, we've built resources specifically for you.

Our catch-all testing guide here includes:

• Step-by-step methodology for creating statistically valid test sets

• LLM prompts to quickly generate synthetic fictional contacts at scale

• Best practices for planting verifiable real contacts

• Frameworks for measuring and comparing provider performance

To get started: Contact Allegrow's API team to discuss test access and receive 10,000 credits to run a comparison test with your own data. We'll help you design a test that reflects your actual list composition and use case, and walk you through our pricing options for these types of data partnerships, with preferential rates.
